ControlNet is a neural network model for Stable Diffusion that lets you copy compositions or human poses from a reference image.

Seasoned Stable Diffusion users know how hard it is to generate the exact composition they want. The images are largely random, so all you can do is generate a large number of images and pick your favorite.

ControlNet helps users precisely control their subjects’ position and how they look.

In this article, let’s discover everything about ControlNet.

What is ControlNet Stable Diffusion?

ControlNet is a neural network model for controlling Stable Diffusion models. You can use it together with any Stable Diffusion model.

Text-to-image is the most basic way to use Stable Diffusion models. It uses text prompts as conditioning to steer image generation, so that the resulting images match the text prompt.

In addition to the text prompt, ControlNet adds one more conditioning. This extra conditioning can take many forms.

Here are two examples of what ControlNet can do:

- Edge detection

- Human pose detection

Edge Detection Example

ControlNet takes an additional input image and detects its outlines using the Canny edge detector. The detected edges are saved as an image called a control map, which is fed into the ControlNet model as extra conditioning alongside the text prompt.

The process of extracting specific information (edges, in this case) from the input image is called annotation, or preprocessing in the ControlNet extension.
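To make the idea of a control map concrete, here is a minimal sketch in plain Python. It is not the Canny detector: it uses a simple neighbour-difference threshold as a stand-in, and the function name `edge_control_map`, the threshold value, and the tiny 5x5 input are all assumptions made for illustration.

```python
def edge_control_map(gray, threshold=50):
    """Return a binary edge map (255 = edge, 0 = background).

    `gray` is a list of rows of 0-255 intensities. This is a simplified
    stand-in for Canny: it only thresholds the difference between each
    pixel and its right and lower neighbours.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Neighbours are clamped at the image border.
            right = gray[y][min(x + 1, width - 1)]
            down = gray[min(y + 1, height - 1)][x]
            gradient = abs(right - gray[y][x]) + abs(down - gray[y][x])
            if gradient >= threshold:
                edges[y][x] = 255
    return edges


# Hypothetical input: a bright 3x3 square on a dark background.
image = [
    [0, 0, 0, 0, 0],
    [0, 200, 200, 200, 0],
    [0, 200, 200, 200, 0],
    [0, 200, 200, 200, 0],
    [0, 0, 0, 0, 0],
]

control_map = edge_control_map(image)
for row in control_map:
    print("".join("#" if value else "." for value in row))
```

The printed grid marks only the outline of the square. That outline, rather than the original pixels, is the kind of information a control map carries.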

Human Pose Detection Example

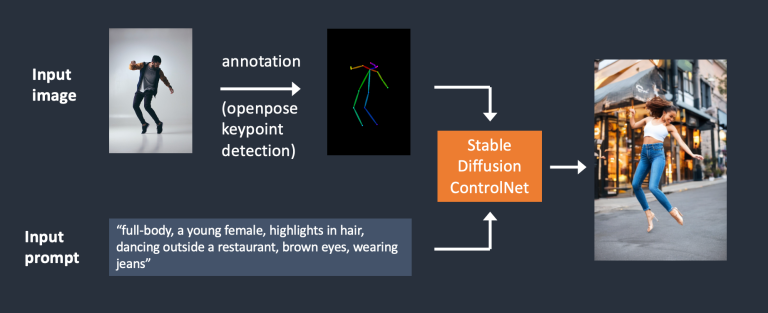

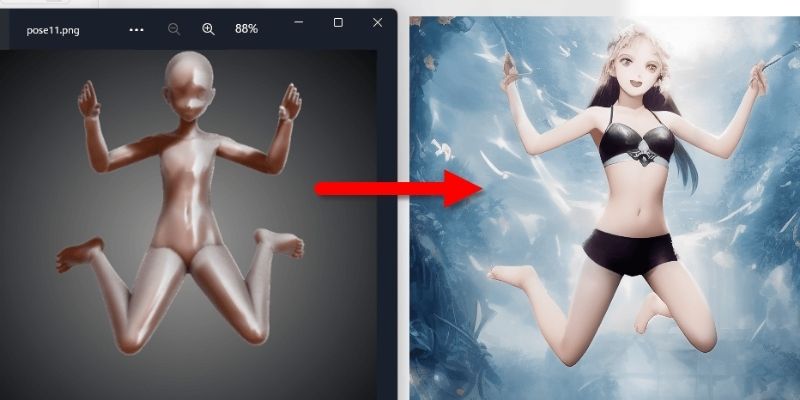

Edge detection is not the only way to preprocess an image. OpenPose is a fast human keypoint detection model that can extract human poses, such as the positions of the hands, legs, and head.

The ControlNet workflow with OpenPose is as follows. Keypoints are extracted from the input image using OpenPose and saved as a control map containing the keypoint positions. The control map is then fed to Stable Diffusion as extra conditioning together with the text prompt. Images are generated based on these two conditionings.
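As a rough illustration of a control map that contains only keypoint positions, the sketch below draws a handful of keypoints onto an empty grid. The keypoint names, coordinates, and the function `keypoint_control_map` are hypothetical. Real OpenPose output and the extension's rendering are more elaborate.

```python
def keypoint_control_map(keypoints, width, height):
    """Rasterise (x, y) keypoints onto a blank grid.

    Background cells are 0. Each keypoint is marked with its 1-based
    index so that different body parts stay distinguishable.
    """
    grid = [[0] * width for _ in range(height)]
    for index, (x, y) in enumerate(keypoints.values(), start=1):
        if 0 <= x < width and 0 <= y < height:
            grid[y][x] = index
    return grid


# Hypothetical keypoints for a standing figure on a 7x6 grid.
pose = {
    "head": (3, 0),
    "left_hand": (1, 2),
    "right_hand": (5, 2),
    "left_foot": (2, 5),
    "right_foot": (4, 5),
}

pose_map = keypoint_control_map(pose, width=7, height=6)
for row in pose_map:
    print("".join(str(value) if value else "." for value in row))
```

Nothing about the background or clothing survives in this map, which is why pose conditioning leaves the rest of the image free to change.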

The difference between Canny edge detection and OpenPose:

The Canny edge detector extracts the edges of both the subject and the background, so the generated image tends to follow the original scene closely.

OpenPose only detects human keypoints such as the positions of the head, arms, and legs. Image generation is freer, yet it still follows the original pose.

How To Install ControlNet Stable Diffusion

Let’s go over how to install ControlNet in AUTOMATIC1111, a popular (and free!) Stable Diffusion GUI. For ControlNet, we will use the sd-webui-controlnet extension, which is the de facto standard.

You may skip to the next section to learn how to use ControlNet if you already have it installed.

In Google Colab

ControlNet is easy to use with the 1-click Stable Diffusion Colab notebook:

- In the Extensions section of the Colab notebook, check ControlNet.

- Click the Play button to start AUTOMATIC1111. That’s all!

On Windows PC or Mac

You can use ControlNet with AUTOMATIC1111 on either a Windows PC or a Mac. If you haven’t already, install AUTOMATIC1111 by following the guide in this article.

If you already have AUTOMATIC1111 installed, make sure your copy is the newest version.

Install ControlNet Extension (Windows/Mac)

- Go to the Extensions page.

- Click the Install from URL button.

- Paste the following URL into the URL for extension’s git repository field.

https://github.com/Mikubill/sd-webui-controlnet

- Click the Install button.

- Wait for the confirmation message indicating that the extension has been installed.

- Relaunch AUTOMATIC1111.

- Navigate to the ControlNet model page.

- Download all model files with the .pth extension.

(If you don’t want to download them all, you can start with the openpose and canny models, which are the most popular ones.)

- Place the model file(s) in the models directory of the ControlNet extension.

stable-diffusion-webui\extensions\sd-webui-controlnet\models

Restart the AUTOMATIC1111 web interface.

If the extension was installed successfully, you will see a new ControlNet section on the txt2img tab, directly above the Script drop-down menu.
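If the ControlNet section appears but no models show up, it can help to confirm that the .pth files landed in the right folder. The following is a minimal sketch, assuming the default folder layout shown above. The helper name `list_controlnet_models` is made up for this example.

```python
from pathlib import Path


def list_controlnet_models(webui_root):
    """Return the sorted names of .pth files in the extension's models folder."""
    models_dir = Path(webui_root) / "extensions" / "sd-webui-controlnet" / "models"
    if not models_dir.is_dir():
        return []  # extension not installed here, or a different layout
    return sorted(path.name for path in models_dir.glob("*.pth"))


# Adjust the root if AUTOMATIC1111 is installed somewhere else.
found = list_controlnet_models("stable-diffusion-webui")
if found:
    print("Model files found:", ", ".join(found))
else:
    print("No .pth model files found in the ControlNet models folder.")
```

An empty result usually means either the path is wrong or the files were saved to a different folder, such as the browser's download directory.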

Install T2I Adapters

T2I adapters are neural network models that provide additional control over image generation with diffusion models. They are conceptually similar to ControlNet but are designed differently.

T2I adapters can be used with the A1111 ControlNet extension. Download the models from this page, taking the files with names like t2iadapter_XXXXX.pth.

Many T2I adapters’ functions overlap with ControlNet models. I’ll only cover the following two:

- t2iadapter_color_sd14v1.pth

- t2iadapter_style_sd14v1.pth

Place them in the ControlNet model folder.

stable-diffusion-webui\extensions\sd-webui-controlnet\models

Update the ControlNet extension

ControlNet is a new extension under rapid development. It is not unusual to find that your copy of ControlNet is out of date.

An update is only needed if you run AUTOMATIC1111 locally on Windows or Mac. In the Colab notebook, the ControlNet extension is always up to date.

To check whether your ControlNet version is current, compare the version number shown in the ControlNet section of the txt2img page with the latest version number.

Update from Web-UI

The AUTOMATIC1111 GUI is the easiest way to update the ControlNet extension.

- Navigate to the Extensions page.

- Click Check for updates in the Installed tab.

- Wait for a confirmation message.

- Close and restart the AUTOMATIC1111 Web-UI.

Command line

If you’re familiar with the command line, you may use this option to update ControlNet, giving you peace of mind that the Web-UI isn’t doing anything else.

Step 1: Launch the Terminal (Mac) or PowerShell (Windows) app.

Step 2: Navigate to the ControlNet extension folder. (If you installed it somewhere else, adjust the path accordingly.)

cd stable-diffusion-webui/extensions/sd-webui-controlnet

Step 3: Run the following command to update the extension.

git pull
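The same update can be scripted. The sketch below assumes git is available on your PATH and that the extension lives in the default folder. It runs `git pull`, which downloads the latest commits and applies them to your local copy. The function names are illustrative and not part of AUTOMATIC1111 or the extension.

```python
import subprocess
from pathlib import Path


def extension_dir(webui_root):
    """Default location of the ControlNet extension inside the Web-UI folder."""
    return Path(webui_root) / "extensions" / "sd-webui-controlnet"


def update_controlnet(webui_root, run=subprocess.run):
    """Run `git pull` inside the extension folder and return git's exit code.

    `run` can be swapped out, which makes the function easy to dry-run.
    """
    result = run(["git", "pull"], cwd=extension_dir(webui_root), check=False)
    return result.returncode


# Example call (adjust the path if you installed the Web-UI elsewhere):
# exit_code = update_controlnet("stable-diffusion-webui")
```

An exit code of 0 means git finished without errors. Restart the Web-UI afterwards so the updated extension is loaded.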

Difference Between the Stable Diffusion Depth Model and ControlNet

Stability AI, the company that created Stable Diffusion, has released a depth-to-image model. It has many similarities with ControlNet, yet there are significant differences.

Similarities

- Both are Stable Diffusion models.

- Both of them employ two conditionings (a preprocessed image and a text prompt).

- Both use MiDaS to estimate the depth map.

Differences

- The depth-to-image model is a v2 model, whereas ControlNet can be used with any v1 or v2 model. This matters because v2 models are notoriously hard to use, and people struggle to generate good images with them. Because ControlNet works with any v1 model, it brings depth conditioning not only to the standard v1.5 model but also to hundreds of custom models released by the community.

- ControlNet is more versatile. In addition to depth, it can condition on edge detection, pose detection, and more.

- ControlNet’s depth map is more detailed than depth-to-image’s.

How Does ControlNet Work?

This article would be incomplete without explaining how ControlNet works under the hood.

ControlNet works by attaching trainable network modules to various parts of the U-Net (the noise predictor) of the Stable Diffusion model. The weights of the Stable Diffusion model are locked so that they stay unchanged during training. Only the attached modules are modified during training.

The model diagram from the research paper sums it up well. The weights of the attached network modules start at zero, so at the beginning of training they contribute nothing and the new model behaves exactly like the trained, locked model.

During training, two conditionings are supplied with each training image: (1) the text prompt and (2) the control map, such as OpenPose keypoints or Canny edges. The ControlNet model learns to generate images based on these two inputs.

Each control method is trained independently.
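The locking and zero-initialisation idea can be shown with a toy example. This is a deliberately tiny sketch with one scalar weight per module, not the actual U-Net or the training code from the research paper. The class name, the numbers, and the learning rate are all assumptions chosen for illustration.

```python
class ToyControlledLayer:
    """One scalar 'layer': a locked weight plus an attached trainable weight."""

    def __init__(self, locked_weight):
        self.locked_weight = locked_weight  # pretrained and never updated
        self.attached_weight = 0.0          # attached module, starts at zero

    def forward(self, x, control):
        # Locked path plus the contribution of the attached module.
        return self.locked_weight * x + self.attached_weight * control

    def train_step(self, x, control, target, lr=0.1):
        """One gradient-descent step on squared error.

        Only the attached weight is updated; the locked weight is untouched.
        """
        error = self.forward(x, control) - target
        grad_attached = 2 * error * control  # d(error**2) / d(attached_weight)
        self.attached_weight -= lr * grad_attached
        return error ** 2


layer = ToyControlledLayer(locked_weight=2.0)
x, control, target = 1.0, 1.0, 3.0

# Before training, the output equals that of the locked model alone.
output_before = layer.forward(x, control)

loss_before = layer.train_step(x, control, target)
loss_after = (layer.forward(x, control) - target) ** 2

print("output before training:", output_before)
print("locked weight after step:", layer.locked_weight)
print("loss went down:", loss_after < loss_before)
```

Starting the attached weight at zero means the first training step begins from the behaviour of the pretrained model instead of from noise, while the locked weight never changes.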

Conclusion

ControlNet gives Stable Diffusion users far more control over the model’s output. In addition to the text prompt, it lets you supply the model with a visual hint in the form of a control map. You can use this additional input to steer the final result’s structure, style, and content.

If you need a robust and adaptable way to generate AI images, ControlNet with Stable Diffusion is an excellent solution. We hope you will find this tool helpful for your future image generation projects.