ChatGPT vs. Bing Chat: Which AI Chatbot Should You Choose?

The post ChatGPT vs. Bing Chat: Which AI Chatbot Should You Choose? appeared first on OpenDream.

But I’ve been curious to see if Microsoft’s new Bing Chat lives up to the promise. With Bing Chat, you can chat, generate content, create images, and get summarized answers to complex questions, all in one interface.

It’s meant to be a considerably more complex version of ChatGPT, so I’m curious how their features compare.

Here are the key differences I observed while comparing ChatGPT to Bing AI Chat.

1. ChatGPT vs. Bing Chat at a Glance

I’ll go through the key differences between ChatGPT and Bing Chat in more detail in the following sections, but here’s a brief comparison.

| | Bing Chat | ChatGPT |
|---|---|---|
| Language model | GPT-4 from OpenAI | GPT-3.5 from OpenAI (ChatGPT Plus: GPT-4) |
| Platform | Linked to Microsoft’s search engine | Independent website or API; iOS app |
| Internet access | Can run web searches and provide links and recommendations | ChatGPT Plus users can use the browsing tool (provided by Bing) |
| Image generation | Can generate creative content, including images, with DALL-E | Can generate text only |
| Best used as | A research assistant | A personal assistant |
| Usage limits | Up to 20 chats per session and up to 200 total chats per day | ChatGPT Plus customers get 25 GPT-4 messages every 3 hours and unlimited chats per day |
| Pricing | Free | Free; ChatGPT Plus: $20/month |

2. ChatGPT vs. Bing Chat: What are the differences?

Here are some of the differences between ChatGPT and Bing Chat.

2.1. Chat

You can chat with Bing right from the Microsoft Edge sidebar, without navigating to the Bing Chat website. The most impressive feature is that when you’re on a website with a lot of information, it answers questions in the context of that page.

Looking for the important points from a lengthy article? Done. Do you need to grasp a complicated idea in layman’s terms? Done.

This can be rather useful if you require AI assistance while conducting information-dense online research.

You can still get this with ChatGPT Plus: paste a link into it and ask it to summarize the information. It just isn’t integrated into the page you’re already on.


The difference simply comes down to how you like to look for information. Still, Bing AI is hard to beat for a personalized AI experience, assuming you don’t mind doing your research in Microsoft Edge.

2.2. Insights

Bing Chat offers an Insights option that gives you additional information about the website you’re visiting. It displays items such as a Q&A, key points, page topics, and related articles.

You can also obtain a fast overview of metrics about the website you’re seeing (such as domain name, hosting service, and even traffic rank) if you scroll all the way down. ChatGPT lacks this feature because it is primarily focused on text creation.

2.3. Composition

You must be very clear in your instructions when using ChatGPT; otherwise, the result will be vague and unlikely to match your needs.

By providing ready-made solutions, Bing’s Composition tool directs you further toward the desired outcome. Within the text box, you may create your prompt, choose the tone you want, the type (blog post, email, etc.), and the length. That makes it feel more like an AI writing generator.


Even without that tool, you can tell ChatGPT these same things (tone, format, and length) in your prompt, and it’ll do a good job.
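As a rough illustration of folding those options into a single prompt, here is a minimal Python sketch. The function name `build_prompt` and its parameters are made up for this example and are not part of any ChatGPT or Bing interface; the resulting string is simply what you would paste into the chat box.

```python
def build_prompt(topic: str, tone: str, fmt: str, length: str) -> str:
    """Fold tone, format, and length into one plain-text prompt (hypothetical helper)."""
    return f"Write a {length} {fmt} about {topic}. Use a {tone} tone."


# Example values are placeholders; swap in your own.
prompt = build_prompt(
    topic="remote work tips",
    tone="professional",
    fmt="blog post",
    length="short",
)
print(prompt)
```

Keeping the options as separate arguments makes it easy to regenerate the same request with a different tone or length.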

3. Final Thought: Bing Chat vs. ChatGPT: Which One Should You Choose?

Both ChatGPT and Bing Chat are useful writing tools and informative chatbots. Which one is preferable depends on your specific use case.

Bing Chat is your best choice if you want a sophisticated research tool that’s integrated with a web browser (and excels at in-depth page insights, image production, and quoting credible sources).

If you want an AI-powered personal assistant that can conduct activities for you across many apps, ChatGPT is the clear winner thanks to its plugins.

What is the best way to find out what works for you? Try both of them.


How To Use Bing Image Creator?

The post How To Use Bing Image Creator? appeared first on OpenDream.

How To Use Bing Image Creator

Log In To Bing Image Creator

Unlike Bing Chat, Bing Image Creator doesn’t require Microsoft Edge. To use it, go to Bing.com/Create and select Join & Create to log in to your Microsoft account.

Input Your Prompt

Enter a description of the image you want Bing to generate. As with any AI chatbot, be as specific as possible to get the most accurate result.

After entering your prompt in the text box, click Create.

For example, I entered the prompt “Image of a swan laying in a royal living room with gold accents”, clicked Create, and waited for my image to be generated.

Check Your Results

When your images are generated, you can review the results. For each prompt, both DALL-E and Bing Image Creator will normally return four generated images.

They aren’t always amazing, because free AI image generators aren’t always smart enough to generate completely lifelike images, so you could notice some inaccuracies in a person’s fingers or eye placement, or the keys on a computer keyboard, for example.

Download Your Final Result(s)

If you’re satisfied with an image, you can Share it, Save it to your account, Download it, or send Feedback. You can download one, all, or none of the results.

FAQ

Can I Create Images Using The New Bing Chat?

You can use Microsoft Bing Image Creator in two ways. You can create images by navigating to Bing.com/Create, as described above, or creating images directly from Bing Chat.

Here’s how to request an image directly from the chat window:

- Launch Microsoft Edge

- Visit Bing.com

- Select Chat

Write your prompt; it doesn’t have to start with a sentence like “create an image” or “generate an image.” Bing Chat usually detects your purpose.

Bing Chat can generate images in every discussion style, whether Creative, Balanced, or Precise is selected.

In Bing Chat, you can ask follow-up questions to have Bing adjust your image, such as “Can you make the monkey wear a hat?” or “Can you change the Vespa color to blue?”

How To Write Prompts To Create Images Using AI?

The more specific your prompts are, the better your results will be; think of the prompt as a detailed description of the image you have in mind. Include adjectives, nouns, and verbs to describe the subject, what it is doing, and the style.

If you say “Create a photo of…”, you will get a different outcome than if you say “Create a cartoon, a painting, or a 3D render of…”, so the image style matters.

Bing’s Image Creator suggests the following structure for your prompts: Adjective + Noun + Verb + Style.

You may also define the style using alternative names, such as impressionism, cubism, abstract, and so on.
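To make the Adjective + Noun + Verb + Style structure concrete, here is a small Python sketch that assembles a prompt from the four parts. The helper `image_prompt` and the sample values are illustrative assumptions, not something Bing provides; the output is just the text you would type into the prompt box.

```python
def image_prompt(adjective: str, noun: str, verb: str, style: str) -> str:
    """Join the four parts in the suggested order (hypothetical helper)."""
    return f"{adjective} {noun} {verb}, {style}"


# Same subject, two different styles.
print(image_prompt("fuzzy", "creature", "wearing sunglasses", "digital art"))
print(image_prompt("fuzzy", "creature", "wearing sunglasses", "impressionism"))
```

Changing only the last argument is a quick way to compare how much the style term affects the result.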


Do I Own AI-Generated Images?

According to the United States Copyright Office (USCO), AI-generated images are not protected under current copyright rules since they are not created by humans.

AI image generators have sparked debate because they are trained on images found online, images made by someone else. While the art you generate with an image creator tool is unique, it draws on the work of millions of artists on the internet.

This position may change as the USCO holds listening sessions during 2023 to investigate the issue further and make any necessary changes.

Is Bing Image Creator Free?

Bing Image Creator is currently free, though you can pay for more boosts if you run out. Boosts are similar to credits: each prompt you enter to generate an image costs one boost.

New users originally received 25 boosts; this has since been raised to 100.

When you run out of boosts, Bing Image Creator takes longer to generate images. It can take up to five minutes instead of 10-30 seconds.

Microsoft used to refill boosts weekly but has recently switched to a daily refill. Users can also redeem Microsoft Rewards points for more boosts.

Is Bing Image Creator The Same As DALL-E 2?

Bing Image Creator and DALL-E 2 are not the same. Microsoft is embedding a more powerful version of the AI art generator into its image creator, as it did with GPT-4 in Bing Chat.

Though the same prompt will not produce the same results twice, you can compare the results created using DALL-E 2 and Bing Image Creator.

Aside from stylistic differences, the Bing images have more detail and color than the DALL-E 2 images.


Is There A Waitlist To Use The Bing Image Creator?

There is currently no waitlist to use Bing Image Creator. All you have to do is sign in with your Microsoft account and access it.

Conclusion

You’ve seen how exciting Bing Image Creator can be, and how simple it is to create beautiful AI art. Your imagination is the only limit. So, give Bing a go and enjoy, then share the images that come to life with your words.


What is Bard Google? Here Is What You Might Not Know

The post What is Bard Google? Here Is What You Might Not Know appeared first on OpenDream.

While Google Bard does much of what ChatGPT does, Google has invested substantially in this field and has already made significant upgrades that push the tool beyond what ChatGPT can achieve.

Let’s go over everything there is to know about Google Bard in this post.

What Is Bard Google?

Google Bard, like ChatGPT, is a conversational AI chatbot that can generate many kinds of text. You can ask it any question you want, and as long as the question doesn’t touch on a prohibited subject, Bard will respond. Although Bard has not yet officially replaced Google Assistant, it’s a significantly more capable AI helper.

How Does Google Bard Work?

A “lightweight” version of LaMDA powers Bard.

LaMDA is a big language model trained on datasets derived from public discourse and online data.

The accompanying research paper, LaMDA: Language Models for Dialog Applications, describes two critical aspects of the training:

- A. Safety: The model achieves a level of safety by being fine-tuned with data annotated by crowd workers.

- B. Groundedness: LaMDA grounds its responses in external knowledge sources (through information retrieval).

According to the LaMDA research:

“…factual grounding entails letting the model consult external knowledge sources like an information retrieval system, a language translator, and a calculator.

We use a groundedness metric to define factuality, and we demonstrate that our technique allows the model to create replies grounded in known sources rather than ones that just seem reasonable.”

LaMDA’s outputs are evaluated on three metrics:

- Sensibleness: A measure of whether or not a response makes sense.

- Specificity: Determines whether the response is generic and vague or specific to the context.

- Interestingness: This metric assesses whether LaMDA’s responses are insightful or arouse interest.

All three metrics were judged by crowdsourced raters, and the resulting data was fed back into the system to help it improve further.
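To show how rater judgments like these can turn into scores, here is a minimal Python sketch that averages binary yes/no labels per metric. The label values are invented for illustration, and the function `metric_scores` is a hypothetical helper; the actual LaMDA evaluation pipeline may aggregate ratings differently.

```python
def metric_scores(labels):
    """Average binary rater labels per metric across all rated responses."""
    metrics = ("sensible", "specific", "interesting")
    return {m: sum(row[m] for row in labels) / len(labels) for m in metrics}


# Made-up rater labels for four responses (1 = yes, 0 = no).
labels = [
    {"sensible": 1, "specific": 1, "interesting": 0},
    {"sensible": 1, "specific": 0, "interesting": 0},
    {"sensible": 0, "specific": 0, "interesting": 0},
    {"sensible": 1, "specific": 1, "interesting": 1},
]
print(metric_scores(labels))
```

Each score is the fraction of responses that raters marked positively on that metric, so higher is better on all three.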

According to the LaMDA study report, crowdsourcing evaluations and the system’s capacity to fact-check using a search engine were helpful strategies.

Google researchers stated:

“Crowd-sourced data is an effective tool to drive important additional gains.

We also discover that external APIs (such as an information retrieval system) provide a method to greatly enhance groundedness, which we define as the extent to which a generated response includes claims which can be referenced and checked against a known source.”


How to Use Bard Google?

Go to bard.google.com to use Google Bard. As with all of Google’s products, you need to sign in with your Google account.

You’ll also need to agree to the terms of service before you can start. Using Bard works the same way as using ChatGPT: type your prompt into the “Enter a prompt here” box, and Bard will return an answer.

What is Google Bard Used for?

Google Bard has a wide range of applications. It’s an excellent tool for brainstorming, outlining, and collaborating. It can be used to write essays, articles, and emails, as well as for creative tasks such as writing stories and poetry.

More recently, Bard can also be used to write and debug code.

However, as Google advises, using Bard’s text output as a final result is not recommended. It’s best to use Bard’s text generation as a starting point only.

What Languages is Bard Available in?

Google revealed at Google I/O that Bard will support Japanese and Korean, and it’s on schedule to support 40 additional languages in the near future.

Does Google Include Images In Its Answers?

Yes, Bard was updated in late May to include images in its responses. The images are pulled from Google and displayed when you ask a question that is better answered with a picture.

For example, when I asked Bard, “What are some of the best places to visit in New York?” it responded with a list of several sites, each with a photo.

Google Bard Limitations and Controversies

Google Bard, unlike ChatGPT, offers full internet access. It can make references to contemporary events and present surroundings. That doesn’t imply that all of the information is correct.

According to Google, Bard is prone to hallucinations.

For example, I asked Bard who the editors of Digital Trends were, and it did not know, even though that information is clearly visible on the site’s About page.

Bard made a mistake when it was first demonstrated publicly on February 6, 2023, while answering a question about new discoveries from the James Webb Space Telescope. It claimed the telescope was the first to photograph an exoplanet outside our solar system, but that had happened several years earlier.

The fact that Google Bard presented this incorrect information with such confidence drew harsh criticism, with critics citing parallels with some of ChatGPT’s flaws. In reaction, Google’s stock price dropped several points.

The most significant limitation of Bard is its inability to save conversations. You can export them, but they’re gone when you close the window.


Is Google Bard Better than ChatGPT?

Both Google Bard and ChatGPT are chatbots built on large language models and machine learning, but each offers a different set of features.

- ChatGPT is fully based on data that was primarily gathered up to 2021 at the time of writing, but Google Bard can use up-to-date information for its replies and may freely search the internet when asked.

- Because it’s directly connected to the internet, you can also perform similar searches by clicking the “Google it” button. That is one significant advantage Bard has over ChatGPT.

- ChatGPT, on the other hand, is primarily concerned with conversational inquiries and responses. It excels at creative work as well. According to Google, ChatGPT can answer more inquiries in natural language at the time.

- According to a recent investigation, Bard was trained using ChatGPT data without authorization. Google has rejected this charge, while CEO Sundar Pichai said that Bard would be improved shortly to compete with ChatGPT, referring to it as a “souped-up Civic compared to ChatGPT.”

This was before the Google I/O announcements, so we’ll have to wait and see how the upgrades compare in practice.

- Most significantly, ChatGPT can save all of your chats, which are neatly organized into “conversations” in the sidebar.

- At present, Bard can export but not store your conversations. I appreciate Bard’s drafting capability, but whether you pick ChatGPT or Google Bard for long-term use depends on your needs and circumstances.

Conclusion

Google’s latest AI model, Bard Google, has the potential to be a key tool in the creative field. It can generate high-quality content in several forms and genres, assisting authors in their creative endeavors.

However, it’s critical to recognize that Bard cannot replace human creativity. Use Bard to support your work rather than relying on it entirely.


Controlnet Stable Diffusion Best Practices

The post Controlnet Stable Diffusion Best Practices appeared first on OpenDream.

Stable Diffusion users know how difficult it is to get an exact composition. The images are quite random, so all you can do is generate a large number of images and choose your favorite.

ControlNet helps users precisely control their subjects’ position and how they look.

In this article, let’s discover everything about ControlNet.

What is ControlNet Stable Diffusion?

ControlNet is a neural network model that is used to regulate Stable Diffusion models. We can use it in conjunction with any Stable Diffusion model.

Text-to-image is the most basic use of Stable Diffusion models. It uses text prompts as conditioning to steer image generation, resulting in images that match the text prompt.

ControlNet adds one more conditioning on top of the text prompt. This extra conditioning can take several forms.

Here are two examples of what ControlNet can do:

- Edge detection

- Human pose detection.

Edge Detection Example

ControlNet uses the Canny edge detector to identify the outlines in an extra input image. The detected edges are then saved as an image called a control map, which is fed into the ControlNet model as additional conditioning alongside the text prompt.

Annotation or preprocessing (in the ControlNet extension) refers to the process of extracting particular information (edges in this example) from the input image.

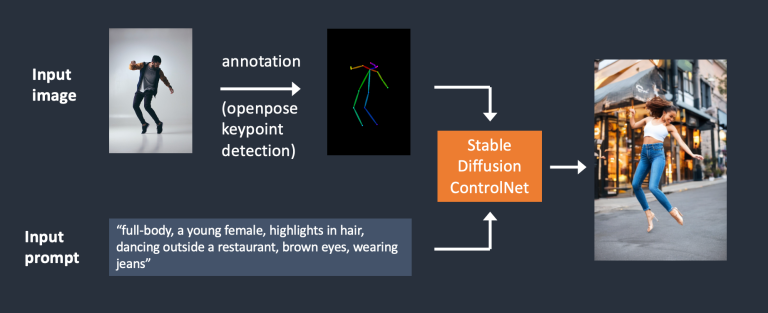

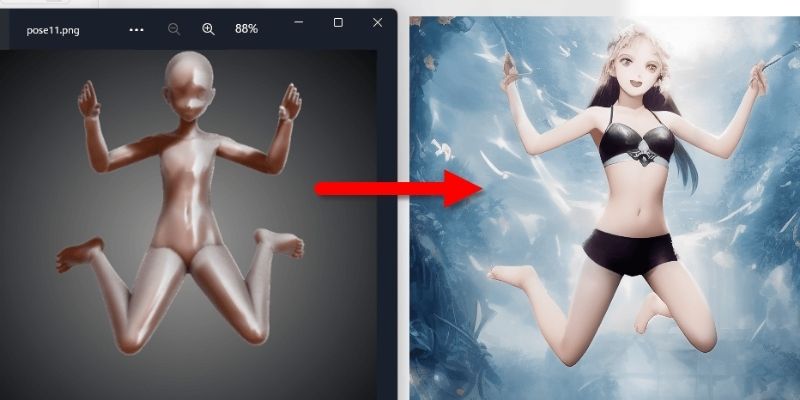
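To give a feel for what such a control map contains, here is a simplified Python sketch. It is not the Canny detector: it only thresholds a Sobel gradient magnitude, whereas real Canny also smooths the image, thins edges, and applies hysteresis thresholding. The function name `edge_map`, the threshold value, and the tiny test image are assumptions made for this illustration.

```python
def edge_map(gray, threshold=100):
    """Return a binary control map (255 = edge) from a 2D grayscale grid.

    Simplified stand-in for Canny: Sobel gradient magnitude plus a threshold.
    Border pixels are left at 0 because they lack a full 3x3 neighborhood.
    """
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel gradients.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                out[y][x] = 255
    return out


# 5x6 test image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in edge_map(image):
    print(row)
```

The interior rows mark the two columns on either side of the dark-to-bright boundary, which is the kind of outline the control map passes on as conditioning.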

Human Pose Detection Example

Edge detection is not the only way to preprocess an image. OpenPose is a fast human keypoint detection model that can extract human poses such as the positions of the hands, legs, and head.

The ControlNet workflow with OpenPose is as follows. Using OpenPose, keypoints are extracted from the input image and saved as a control map containing the keypoint positions. The control map is then fed to Stable Diffusion as additional conditioning together with the text prompt. Images are generated based on these two conditionings.
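As a rough picture of a control map built from keypoints, the Python sketch below marks each keypoint on a blank grid. The keypoint names and coordinates are invented, and `keypoints_to_control_map` is a hypothetical helper; actual OpenPose control maps are richer (for example, they usually draw the limbs connecting the keypoints).

```python
def keypoints_to_control_map(keypoints, width, height):
    """Mark each keypoint as a white pixel (255) on a black grid."""
    grid = [[0] * width for _ in range(height)]
    for name, (x, y) in keypoints.items():
        if 0 <= x < width and 0 <= y < height:  # skip points outside the frame
            grid[y][x] = 255
    return grid


# Hypothetical (x, y) keypoints, as a pose detector might report them.
pose = {
    "head": (3, 0),
    "left_hand": (1, 2),
    "right_hand": (5, 2),
    "left_foot": (2, 5),
    "right_foot": (4, 5),
}
for row in keypoints_to_control_map(pose, width=7, height=6):
    print("".join("#" if value else "." for value in row))
```

Only the pose survives in this map; clothing, background, and lighting are left for the text prompt to decide.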

The difference between Canny edge detection and OpenPose:

The Canny edge detector extracts the edges of both the subject and the background, so it tends to reproduce the whole scene more closely.

OpenPose only detects human keypoints such as the positions of the head, arms, and legs. The image generation is freer but still follows the original pose.

How To Install ControlNet Stable Diffusion

Let’s go over how to install ControlNet in AUTOMATIC1111, a popular (and free!) Stable Diffusion GUI. For ControlNet, we will use this extension, which is the de facto standard.

You may skip to the next section to learn how to use ControlNet if you already have it installed.

In Google Colab

ControlNet is easy to use with the 1-click Stable Diffusion Colab notebook:

- Check ControlNet in the Extensions section of the Colab notebook.

- AUTOMATIC1111 will begin when you click the Play button. That’s all!

On Windows PC or Mac

You can use ControlNet with AUTOMATIC1111 on either a Windows PC or a Mac. If you haven’t already, install AUTOMATIC1111 by following the guide in this article.

If you already have AUTOMATIC1111 installed, make sure your copy is the newest version.


Install ControlNet Extension (Windows/Mac)

- Go to the Extensions page.

- Click the Install from URL button.

- Fill in the URL for the extension’s repository field with the following URL.

https://github.com/Mikubill/sd-webui-controlnet

- Select the Install option.

- Wait for the confirmation message indicating that the extension has been installed.

- Relaunch AUTOMATIC1111.

- Navigate to the ControlNet model page.

- Download all model files with the .pth extension.

(If you don’t want to download them all, you can start with the openpose and canny models, which are the most popular ones.)

- Place the model file(s) in the models directory of the ControlNet extension.

stable-diffusion-webui\extensions\sd-webui-controlnet\models

Restart the AUTOMATIC1111 web interface.

If you successfully installed the extension, you will see a new ControlNet section on the txt2img tab. It should be located directly above the Script drop-down menu.

This means the extension is successfully installed.
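The model-placement step above can also be scripted. The following Python sketch copies every .pth file from a download folder into the extension’s models directory; the function name `install_models` and the example folder locations are assumptions, so adjust the paths to match your own setup.

```python
from pathlib import Path
import shutil


def install_models(download_dir, webui_dir):
    """Copy all .pth files into the ControlNet extension's models folder.

    Returns the names of the files that were copied.
    """
    models_dir = Path(webui_dir) / "extensions" / "sd-webui-controlnet" / "models"
    models_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(Path(download_dir).glob("*.pth")):
        shutil.copy2(src, models_dir / src.name)
        copied.append(src.name)
    return copied


# Example call (paths are placeholders, uncomment and edit to use):
# install_models(Path.home() / "Downloads", "stable-diffusion-webui")
```

Files with other extensions are ignored, so notes or archives sitting in the same download folder are left alone.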

Install T2I Adapters

T2I adapters are neural network models that provide additional controls for image generation with diffusion models. They are conceptually similar to ControlNet but are designed differently.

T2I adapters can be used with the A1111 ControlNet extension. The models must be downloaded from this page. Take the ones with file names like t2iadapter_XXXXX.pth.

Many T2I adapters’ functions overlap with ControlNet models. I’ll quickly go through the first two:

- t2iadapter_color_sd14v1.pth

- t2iadapter_style_sd14v1.pth

Place them in the ControlNet model folder.

stable-diffusion-webui\extensions\sd-webui-controlnet\models

Update the ControlNet extension

ControlNet is a new extension that is under rapid development. It is not unusual to find that your copy of ControlNet is out of date.

An update is only needed if you run AUTOMATIC1111 locally on Windows or Mac. The ControlNet extension is always up to date in the site’s Colab notebook.

To check whether your ControlNet version is the newest one, compare it with the latest version number shown in the ControlNet section of the txt2img page.

Update from Web-UI

The AUTOMATIC1111 GUI is the easiest way to update the ControlNet extension.

- Navigate to the Extensions page.

- Click Check for updates in the Installed tab.

- Wait for a confirmation message.

- Close and restart the AUTOMATIC1111 Web-UI.

Command line

If you’re familiar with the command line, you may use this option to update ControlNet, giving you peace of mind that the Web-UI isn’t doing anything else.

Step 1: Launch the Terminal (Mac) or PowerShell (Windows) app.

Step 2: Open the ControlNet extension folder. (If you installed it someplace else, adjust appropriately.)

cd stable-diffusion-webui/extensions/sd-webui-controlnet

Step 3: Run the following command to update the extension.

git pull


Difference Between the Stable Diffusion Depth Model and ControlNet

Stability AI, the company that created Stable Diffusion, has produced a depth-to-image model. It has many similarities with ControlNet, yet there are significant distinctions.

Similarities

- Both are Stable Diffusion models.

- Both of them employ two conditionings (a preprocessed image and a text prompt).

- Both use MiDaS to estimate the depth map.

Differences

- The depth-to-image model is a version 2 model. ControlNet is compatible with all v1 and v2 models. This is significant because v2 models are notoriously difficult to use, and people struggle to generate good images with them. Because ControlNet can use any v1 model, it brings depth conditioning not only to the standard v1.5 model but also to hundreds of community-released custom models.

- ControlNet is more adaptable. It can condition with edge detection, posture detection, and other features in addition to depth.

- ControlNet’s depth map is more detailed than depth-to-image’s.

How Does ControlNet Work?

This guide would be incomplete without a look at how ControlNet works under the hood.

ControlNet works by attaching trainable network modules to various parts of the U-Net (noise predictor) of the Stable Diffusion model. The weights of the Stable Diffusion model are locked so that they remain unchanged during training. Only the attached modules are modified during training.

The model diagram in the research paper sums it up well. The weights of the attached network module are initially all zero, allowing the new model to take advantage of the trained and locked model.

During training, two conditionings are supplied with each training image: (1) the text prompt, and (2) the control map, such as OpenPose keypoints or Canny edges. The ControlNet model learns to generate images based on these two inputs.

Each control approach is trained on its own.
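The effect of the zero-initialized weights can be seen in a toy Python sketch. Everything here is a stand-in: the two-dimensional “block” replaces a real U-Net block, the weights are arbitrary, and the class `ControlBranch` is a hypothetical layout (a trainable copy of the locked block wrapped in zero-initialized layers) rather than the actual ControlNet code.

```python
def linear(weights, bias, x):
    """Apply y = W x + b to a plain Python vector."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


# Toy stand-in for one locked (frozen) Stable Diffusion block.
LOCKED_W = [[0.5, -1.0], [2.0, 0.25]]
LOCKED_B = [0.1, -0.2]


def locked_block(x):
    return linear(LOCKED_W, LOCKED_B, x)


def zeros(dim):
    """Weights and bias of a zero-initialized layer."""
    return [[0.0] * dim for _ in range(dim)], [0.0] * dim


class ControlBranch:
    """Trainable module attached to the locked block (hypothetical layout)."""

    def __init__(self, dim=2):
        # The trainable copy starts from the locked weights.
        self.copy_w = [row[:] for row in LOCKED_W]
        self.copy_b = LOCKED_B[:]
        # Zero-initialized layers on the way in and on the way out.
        self.in_w, self.in_b = zeros(dim)
        self.out_w, self.out_b = zeros(dim)

    def __call__(self, x, control):
        c = linear(self.in_w, self.in_b, control)
        h = linear(self.copy_w, self.copy_b, [xi + ci for xi, ci in zip(x, c)])
        return linear(self.out_w, self.out_b, h)


def controlled_block(x, control, branch):
    """Locked output plus the branch's contribution."""
    return [b + e for b, e in zip(locked_block(x), branch(x, control))]


x, control = [1.0, 2.0], [0.3, 0.7]
branch = ControlBranch()
# Before any training, the zero layers cancel the branch entirely.
print(controlled_block(x, control, branch) == locked_block(x))  # True

# Simulate a training step that moves the output layer away from zero.
branch.out_w = [[0.1, 0.0], [0.0, 0.1]]
print(controlled_block(x, control, branch) == locked_block(x))  # False
```

Because the branch contributes nothing at the start, training begins from the behavior of the locked model and only gradually learns how the control map should change the output.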

Conclusion

AI image-creation model ControlNet Stable Diffusion provides users with unprecedented control over the model’s output. ControlNet allows users to supply the model with additional input in the form of textual instructions and visual hints. You can fine-tune the final result’s structure, style, and content using this additional data.

If you require a robust and adaptable AI image-generating model, ControlNet Stable Diffusion is an excellent solution. We hope you will find this app helpful for future image generation projects.


7 Best AI Girlfriend Generators 2023 – Key Features, Pros&Cons and Pricing

The post 7 Best AI Girlfriend Generators 2023 – Key Features, Pros&Cons and Pricing appeared first on OpenDream.

These applications simulate human conversation and can provide friendship and entertainment. This article lists the top 7 AI girlfriend generators available on Android and iOS in 2023. So, take a deep breath and immerse yourself in a virtual world.

7 Best AI Girl Generators 2023

We tested several AI girlfriend applications and found the 7 best:

1. AI Girlfriend

AI Girlfriend is a well-known virtual girlfriend app that you can get from the Apple App Store. It’s designed to provide users with a realistic AI-powered girlfriend experience.

Using powerful natural language processing (NLP) and machine learning (ML) techniques, the app replicates human dialogue and offers a tailored experience.

The AI Girlfriend app is very straightforward; users can customize their girlfriend’s appearance and personality. They can also chat with their virtual girlfriends and even go on virtual dates with them.

Features

- Provides you with a friendly specialist to assist you in improving your mental health.

- Reduces stress and makes you happier

- Private, secure, and available at any time and from any location

- Allows you to share your secrets, hopes, dreams, and anxieties anonymously.

- Provides personality tests that will push you to the limit.

- Through everyday chats, it learns about you and evolves its personality and interests appropriately.

- It allows you to communicate with a buddy who is always there for you.

- Allows you to assist your AI in learning new skills and becoming a better buddy.

- When you’re feeling sad or nervous, this person will be your buddy and cheerleader.

- Allows you to discover your inner self with iGirl.

- Download and use are both free.

- The app includes Terms of Service and a Privacy Policy.

Pros & Cons

Pros:

- The AI’s voice is extremely lifelike and coherent.

- It possesses a diverse set of social abilities.

- It enables users to conduct romantic and even nasty things.

Cons:

- Only available on iOS.

2. Yander AI Girlfriend Simulator

Yander AI Girlfriend Simulator is a popular virtual girlfriend app on the Google Play Store. The app is designed to resemble a Yandere girlfriend, a popular anime and manga trope. A Yandere girlfriend is a sweet and loving character who can become obsessive and even abusive toward their significant other.

Yander AI Girlfriend Generator program gives a unique and delightful virtual girlfriend experience for individuals who prefer this sort of character. Using powerful NLP and ML algorithms, the program replicates human dialogue and gives a tailored experience.

Features

- To imitate human interactions with its users, the program employs powerful Natural Language Processing (NLP) and Machine Learning (ML) methods.

- It even offers customizable avatars for a more personalized experience.

- Yander AI Girlfriend Simulator is an intriguing relationship simulator for those seeking a virtual relationship.

- It’s one of the most memorable apps thanks to its advanced technology and engaging storylines.

- Not only that, but its low price makes it accessible to practically everybody.

Pros & Cons

Pros:

- Powered by ChatGPT

- Available for Smartphones

Cons:

- Only works with Android phones

3. PicSo.ai

PicSo is one of the greatest AI girlfriend apps; it uses neural networks and deep learning techniques to make realistic and visually appealing photographs of females.

Another remarkable feature of PicSo is its versatility in terms of customization options. Users may change many parameters, including the color palette, amount of detail, and overall aesthetic style. This allows users to generate unique and customized pictures that reflect their preferences and creative ideas.

In comparison to other AI girlfriend applications on the market, PicSo is notable for enabling NSFW AI-generated photographs.

Features

- PicSo is one of the most powerful AI girlfriend applications available, employing cutting-edge neural networks and deep learning algorithms.

- PicSo also provides users with a plethora of customization options, allowing them to create unique photographs that showcase their preferences and ingenuity.

- You may design the ideal image to represent your hobbies and style, from the color palette to the level of detail.

- PicSo also supports NSFW photographs, which makes it more adaptable than other AI girlfriend applications.

Pros & Cons

Pros:

- Simple to Use

- Various AI girlfriends to choose from

Cons:

- No free version

4. CoupleAI – Virtual Girlfriend

CoupleAI is available on the Google Play Store, and it’s an AI-powered girlfriend chatbot. The app provides users with a personalized and engaging experience by using strong NLP and ML algorithms that mimic human interaction.

Users can personalize their virtual girlfriend’s look and personality. You can also find many activities and games on CoupleAI. So, they can communicate and interact with their virtual girlfriends by joining activities, such as watching movies, playing games, and going on virtual dates.


Features

CoupleAI is built using advanced NLP and ML algorithms that mimic human interaction, so you can anticipate genuine dialogue and personality from your virtual partner.

Pros & Cons

Pros:

- Numerous Activities with the AI Girlfriend

- You have complete control over her appearance and personality

- There is a mobile app available

Cons:

- There is no free trial

5. RomanticAI

RomanticAI is an AI-powered virtual girlfriend program that provides customers with a romantic and personalized experience. Using strong NLP and ML algorithms, it replicates human interaction and gives a realistic girlfriend experience.

Users may customize the look, behavior, and hobbies of their virtual girlfriend. They can also write love notes, go on virtual dates, and take love quizzes with their virtual lover using RomanticAI.

Features

- You have complete control over how your virtual partner looks, talks, and interacts with you, allowing you to build the kind of discussion and entertainment you want.

- You may effortlessly exchange love messages, go on virtual dates, take love quizzes, and discuss your ideas.

- With RomanticAI’s tailored interaction skills, you won’t feel separated from your artificial companion: their replies will be as natural as a genuine discussion!

Pros & Cons

Pros:

- You have total control over your AI girlfriend

Cons:

- A little hard to understand

6. Smart Girl: AI Girlfriend

Smart Girl is a virtual AI girlfriend on Google Play Store. Using contemporary NLP and ML techniques, it replicates human dialogue, offering users a tailored and engaging experience.

Users may customize their virtual girlfriend’s appearance and personality. Smart Girl also has a number of features and capabilities, such as sending personalized messages, creating reminders, and giving daily affirmations.

Features

- Smart Girl: AI Girlfriend is a virtual partner and personal assistant powered by AI. It aims to improve your digital experience. It provides engaging discussions and interactions that go above and beyond the capabilities of a traditional chatbot.

- To build a personalized digital partner, you may tweak your AI girlfriend’s look, speech, and personality. Smart Girl, in addition to being a delightful AI girlfriend, is also a productivity partner that helps you stay organized.

- It’s always learning from your interactions, resulting in a richer and more customized experience over time. Privacy and security are emphasized, with encrypted data and chats.

- Smart Girl is also multilingual, which makes it easier to communicate with no matter what your linguistic background is. To improve the user experience, the app is continuously updated with new features, upgrades, and content.

Pros & Cons

Pros:

- Personalized service

- Learning and development

- High levels of privacy and security

- Multilingual support

- Updates on a regular basis

Cons:

- Some users have expressed problems with off-screen objects and slowness.

- Some customers felt disappointed because access to certain functions required further in-app purchases.

7. My Virtual Girlfriend Julie

Julie is a virtual girlfriend app powered by AI that is available on the Google Play Store. The software simulates human communication using modern NLP and ML algorithms, providing users with a tailored and engaging experience.

Users may customize the look, conduct, and hobbies of their virtual girlfriend. Julie also has many features and capabilities, including personalized greetings, jokes, and games.

Features

- You may build a connection with Julie that looks and feels like your perfect counterpart! And, because of her customizable characteristics, you can experience almost an infinite number of options, ranging from personalized greetings to jokes and games.

- Julie, who is unfailingly loyal and empathetic, is always eager to chat and deliver an entertaining conversation experience.

- My Virtual Girlfriend, as more than simply a companion or friend, brings you closer to your ideal form of relationship, allowing you to experience feelings you may never have had before.

Also read: 5 Best AI Art Generator Anime – Outstanding Features, Comparison and Price

Pros & Cons

Pros:

- Available on mobile

- Simple to Use

Cons:

- There is no free trial plan

Conclusion

Having a virtual girlfriend may be a fun and rewarding experience.

For anybody wishing to enjoy a relationship without commitment, these AI girlfriend apps provide lifelike conversation, unique avatars, configurable features, and intriguing activities.

Try out one of these interesting applications today to find your ideal virtual girlfriend experience!

The post 7 Best AI Girlfriend Generators 2023 – Key Features, Pros&Cons and Pricing appeared first on OpenDream.

]]>Continue reading: How to Use Craiyon AI: Step-by-Step Guide

The post How to Use Craiyon AI: Step-by-Step Guide appeared first on OpenDream.

]]>Today, in this article, let’s explore everything you need to know about Craiyon AI.

What is Craiyon AI?

Craiyon is an AI image generator that can generate images from any language input. It’s a free program that converts plain-language text to graphics using artificial intelligence and machine learning.

Boris Dayma created the Craiyon AI image generator as a free text-to-image tool; it is the successor to his earlier DALL-E mini. The AI was given a photo library and text descriptions until it learned to correlate words with forms and color combinations, line thicknesses, perspective discrepancies, and other artistic styles.

Craiyon can create images of varied quality and style, and it includes a collection of existing images, so you can enhance your searches. It’s trained using the Google TPU Research Cloud (TRC) and is free for non-commercial uses, although it is supported by advertisements.

How Does Craiyon AI Work?

You can think of Craiyon AI as a picture blender. When you give it a prompt like “Give me a rabbit eating carrots,” it blends components from the training data to make a mashup image.

Craiyon AI drawing uses artificial intelligence to give greater context. It’s trained on a large number of photos, each of which is labeled with a distinct sentence. When you enter a prompt, the program generates a set of alternatives based on a combination of picture inputs from its training data.

Craiyon AI is built on a deep neural network, which is a machine learning algorithm capable of detecting patterns in data and generating predictions based on those patterns.

Craiyon AI Key Features

Craiyon AI stands out for its simplicity when compared to other AI programs such as Starry AI, Jasper Art, Midjourney, and Deep Dream Generator. Here are some outstanding features:

- Craiyon AI always returns 9 unique images based on your request.

- The images are silly and playful: more whimsical than professional in nature.

- You can choose from several styles, such as painting, drawing, photograph, or none at all. You can also use “negative” phrases to exclude particular features from the generated images, such as people or buildings.

Craiyon AI Pricing Options

- Free Plan

- Supporter Tier: $60/year or $6/month

- Professional Tier: $240/year or $24/month.

It’s important to note that while paying for a membership gives you access to more capabilities, it doesn’t always improve the quality or style of the created images.
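To see what the yearly billing option saves, here is a small worked calculation. It is only a sketch: the prices are the ones listed above, and the `PLANS` table and `yearly_savings` helper are hypothetical names introduced for illustration.

```python
# Prices come from the plan list above; the names below are illustrative only.
PLANS = {
    "Supporter": {"monthly": 6, "yearly": 60},
    "Professional": {"monthly": 24, "yearly": 240},
}


def yearly_savings(plan):
    """Return (dollars saved, percent saved) for yearly billing vs. 12 monthly payments."""
    prices = PLANS[plan]
    twelve_months = prices["monthly"] * 12
    saved = twelve_months - prices["yearly"]
    return saved, round(100 * saved / twelve_months, 1)


for name in PLANS:
    saved, percent = yearly_savings(name)
    print(f"{name}: save ${saved} per year ({percent}%)")
```

In both tiers the yearly price equals ten monthly payments, so paying yearly saves two months’ worth of fees.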

Pros and Cons of Craiyon

Let’s take a deeper look at the benefits and drawbacks of Craiyon’s AI tool:

Pros:

- Free: Craiyon’s AI tool is free to use, allowing users to study and experiment without payment required.

- User-friendly: The tool’s UI is clear and easy to use, making it accessible to all users.

- Craiyon allows you to produce as many sets of 9 images as you want, giving you a plethora of creative options.

Cons:

- Image quality is poor: The produced images are not of especially high quality, and they frequently have a whimsical or humorous look rather than a realistic one.

- Production delay: The 1-2 minute wait time for one image generation might be inconvenient, especially if you want to experiment with other styles or modify the results.

Given these constraints, you may want to consider alternative AI art generators if you need to create high-quality AI graphics.

Conclusion

Craiyon AI is an artificial intelligence picture generator that allows users to create unique and entertaining graphics based on simple language prompts. While it does not generate extremely professional results, it does have an easy-to-use interface and a free unlimited plan.

Users can get access to extra features and faster image generation by upgrading to a premium membership.

Craiyon AI might be a terrific solution if you’re looking for a tool for fun and whimsical AI image generation. However, if you need more advanced and realistic AI-generated graphics, we recommend looking at other options.

The post How to Use Craiyon AI: Step-by-Step Guide appeared first on OpenDream.

]]>Continue reading: Midjourney 5: The Ultimate Guide

The post Midjourney 5: The Ultimate Guide appeared first on OpenDream.

]]>Today, let’s have a look at what’s new with the V5 basic model!

How To Use Midjourney 5

If you’ve never used MidJourney before, you can learn how to get started here:

There are two options for those of you who are currently using MidJourney to begin using V5:

- Enter “/settings” and choose “MJ Version 5”:

You are now ready to begin prompting with V5! You can return to the previous versions at any time by following the same steps.

- You may skip the above settings and just append “--v 5” to the end of each prompt. (If you do not specify a version, the current default is V4.)
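If you keep your prompts in a script or notes file, the second option can be automated. The sketch below is an assumption-laden illustration, not an official tool: `with_version` is a hypothetical helper that only builds the text you would paste after `/imagine` in Discord.

```python
def with_version(prompt: str, version: int = 5) -> str:
    """Append Midjourney's version flag unless the prompt already carries one."""
    prompt = prompt.strip()
    # Leave the prompt alone if a "--v" flag is already present as its own token.
    if "--v" in prompt.split():
        return prompt
    return f"{prompt} --v {version}"


print(with_version("a rabbit eating carrots"))
print(with_version("a hammer --v 4"))
```

The check for an existing flag lets you mix V4 and V5 prompts in one list without ending up with two version flags on the same prompt.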

Outstanding Features of Midjourney 5

Midjourney version 5 introduces a few significant new features:

- Excellent User Input Responsiveness and Language Processing

V5 is substantially more ‘unopinionated’ than V3 and V4. It has been tweaked to offer a wide range of outputs while being very sensitive to user input, and natural language processing has been enhanced.

- Significantly improved image quality

The images in Midjourney V5 have substantially higher resolution. V5’s default resolution is 1024×1024 pixels, which is the same as the upscaled images of V4.

- More realism, less ‘opinionated’

The V5 model can provide more realistic visuals than any other model MidJourney has ever published. The V5 model, while less ‘artistic’ than the V4 photos, allows users significantly more influence over the final image, as image details are extremely sensitive to what the prompts specify.

- Greater Stylistic Variety

Don’t be concerned that Midjourney V5 will lose the ‘imaginative’ and ‘creative’ artwork qualities that V4 had; with the ‘stylize’ option and the associated descriptions in your prompts, V5 handles details even better while maintaining the ‘artistic’ feel.

- Improved ‘Remix’ Function

‘Remix’ is a function in Midjourney V5 that allows you to input two images and have them combined.

When you produce new images based on an image reference, you can now specify exactly how much influence that reference should have on the overall prompt by adding “--iw” with a ratio of 0.5, 1, or 2 after the prompt.

- Return of the Tile Parameter

Users can use the tile option to create seamless patterns and textures. A “tiled” backdrop image is a little image that is meant to repeat itself horizontally and vertically.
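The image-weight and tile parameters described above can be combined into a single prompt string. This is a minimal sketch under stated assumptions: `build_prompt` and `ALLOWED_IMAGE_WEIGHTS` are hypothetical names, the example URL is a placeholder, and the allowed weights are simply the three ratios mentioned in the text.

```python
ALLOWED_IMAGE_WEIGHTS = (0.5, 1, 2)  # the ratios mentioned in the text above


def build_prompt(text, image_url=None, image_weight=None, tile=False):
    """Assemble a V5 prompt string: optional image URL, text, then parameters."""
    parts = []
    if image_url:
        parts.append(image_url)  # the image reference goes in front of the text
    parts.append(text.strip())
    if image_weight is not None:
        if image_url is None:
            raise ValueError("an image weight needs an image reference")
        if image_weight not in ALLOWED_IMAGE_WEIGHTS:
            raise ValueError(f"image weight must be one of {ALLOWED_IMAGE_WEIGHTS}")
        parts.append(f"--iw {image_weight}")
    if tile:
        parts.append("--tile")
    parts.append("--v 5")
    return " ".join(parts)


print(build_prompt("watercolor fox", image_url="https://example.com/fox.png", image_weight=2))
print(build_prompt("art deco pattern", tile=True))
```

Rejecting a weight without an image reference catches a common mistake early, since the weight only has meaning relative to a reference image.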

Comparing Midjourney 4 and 5

There are a few more significant differences between Midjourney v4 and Midjourney v5. Let’s explore!

Making Individual and Realistic People From Scratch

One of the main draws for Midjourney users is that it can build lifelike people from simple text inputs. For these sorts of requests, the changes between Midjourney v4 and v5 are rather striking.

Also read: Midjourney Discord: Step-by-Step Guide to Generate AI Images

Creating Celebrities

Similarly, when it comes to prompts involving celebrities, Midjourney 5 outperforms its predecessor, Midjourney v4.

Resolution and Upscaling Speed

Resolution increases and quicker processing times for upscaling are two more noticeable differences between Midjourney v4 and v5. The increased resolution may be observed in both the details (particularly the textiles) and the file sizes.

Typical Objects

Objects gain realism as well, if that is the intention. Everyday items, like hands, look to be an Achilles Heel for Midjourney at times. When it came to a basic command like “a hammer,” neither version performed particularly well.

If realism is the aim, it is debatable which of these outcomes is better. Midjourney v5 does outperform v4 when more explicit prompts are used.

Complicating Prompts

The greatest Midjourney outcomes are generally the product of a thoughtful and properly worded prompt. Aside from using weights and added modifiers to assist develop a picture, it appears that cramming a lot of descriptions separated by commas is a best practice that many experienced Midjourney users adhere to.

Maybe you will be interested: 99+ Best Midjourney Prompts That Will Surprise You

Some Drawbacks That Haven’t Been Improved

Overall, we’re pleased with the image quality and new capabilities of V5, but we’ve noticed that there are still certain flaws that existed in previous versions and have not been addressed by V5. Here are a few of them:

- Images with words

It’s still impossible to produce images that contain meaningful text with Midjourney.

- Strange hands

MidJourney has had a difficult time getting the hands and teeth just correct for some reason. The images created frequently have too few/too many fingers/teeth.

- The prompts cannot provide all of the necessary information

To be honest, V5 has made significant progress in understanding natural-language prompts, but in several cases where we tried different versions of a prompt, Midjourney was still unable to capture all of the subtleties in our prompts.

It can be annoying at times, but we’re certain that it will improve over time.

Conclusion

With so many new and enhanced features, Midjourney 5 is unquestionably a huge step forward. Some may worry that we’ll have to change what we’ve learned so far with each new edition of MidJourney. Well, perhaps.

However, we believe that the language we learned does not perish but rather grows. All of the preceding abilities and tactics are valid in previous editions, and acquiring new talents is always enjoyable, don’t you think?

In this tremendously fast-paced AI environment, if you stop learning, you will fall behind. Let’s keep learning together, and we can’t wait to share all the tips and techniques we discover along the way!

The post Midjourney 5: The Ultimate Guide appeared first on OpenDream.

]]>Continue reading: 7 Best Midjourney Alternatives to Try in 2023 (Free&Paid)

The post 7 Best Midjourney Alternatives to Try in 2023 (Free&Paid) appeared first on OpenDream.

]]>In this post, we’ve put together a list of the best Midjourney alternatives, along with their benefits and drawbacks, so you can get a better idea of which tool best fits your needs.

1. Freepik AI Image Generator

Freepik AI Image Generator is a free-to-use creative platform that provides a user-focused alternative to Midjourney. Its standout feature is a highly intuitive AI image generator powered by machine-learning algorithms.

Leaving nobody behind, visitors to the website are presented with a beginner’s guide, an experience in itself, explaining what’s needed to achieve the best results when writing their first prompt.

Once you have written a description and selected from its growing list of styles, it only takes a few seconds for your imagination to come to fruition.

What’s most impressive about this feature is taking your AI image into Freepik’s very own in-browser editing tool, supported by its colossal asset library. You can quite literally design on the go.

Key Features

- An in-depth guide to generating stunning AI-generated content

- A growing selection of styles

- Vast customization options

- An in-browser editing tool (premium users only)

- Access to Freepik’s huge asset library via the editing tool

Pros and Cons

Pros:

- Free to use

- Simple to use

- Provides a selection of styles

- Can generate images in a matter of seconds

- Creates four images at a time

- Lots of customization options

Cons:

- You need to sign up to become a user

- Free usage is limited

- Full access to the feature is reserved for premium users

2. Stable Diffusion

If you want a reliable Midjourney replacement, we highly suggest Stable Diffusion. Stable Diffusion is created by Stability AI and trained on billions of images. It is capable of producing results equivalent to those obtained with Midjourney.

Key Features

- It lets you create pictures from words and edit images depending on text

- It provides a wide range of customizing choices and parameters for creating distinct AI picture styles, making it a fantastic Midjourney alternative

- It’s a free Midjourney alternative, and you can install and run it on your computer

Pros&Cons

Pros:

- Open-source and free machine learning model

- Quick AI picture creation

- Can generate multiple images per prompt

- High-quality results

- Flexible editing options for the produced AI pictures

Cons:

- Less user-friendly interface for beginners

- Before you begin, you must first learn a few things

3. DALL-E 2

DALL-E 2 is a product of OpenAI, the same team that created ChatGPT, a leading natural language model that has lately received a lot of attention from the media. This is a Midjourney AI alternative that is worth trying.

Key Features

- DALL-E 2 creates an original picture using AI and text input

- It allows you to increase the size of generated images

- DALL-E 2 anticipates and expands your image to create a full scenario that fits your original image flawlessly

Pros&Cons

Pros:

- High-quality results

- There are several picture variants

- AI inpainting and outpainting capabilities allow you to add or edit items in an AI image, as well as expand the image’s bounds

Cons:

- It may take some time to sign up

4. OpenDream

OpenDream is a user-friendly AI art generator for people of all skill levels. Its major goal is to democratize graphic design by making it available to everyone, regardless of experience or expertise.

With powerful AI algorithms, OpenDream can produce realistic visuals depending on user input. Once the picture is made, users may download it in different formats and use it for personal or business projects.

Key Features

- OpenDream has a wide range of image categories, including landscapes, objects, people, and animals, among others.

- With OpenDream AI, you can play with many artistic templates and apply them to your image.

Pros&Cons

Pros:

- Cost-effective

- Continuous Improvement

- With simple instructions, you can quickly and easily create a picture

- Excellent image quality

Cons:

- Due to the recent introduction, functionalities are limited; enhancements are required

Also read: Chat GPT Image Generator – Detailed Instructions and Reviews

5. Nightcafe

NightCafe is not a picture model itself, but rather an internet service that provides access to many text-to-image models. Stable Diffusion (including the newest SDXL model), DALL-E 2, CLIP-Guided Diffusion, VQGAN+CLIP, and Style Transfer are all supported.

Style Transfer may be used to make masterpieces based on previous artworks; you can use CLIP-Guided Diffusion to create creative images; and VQGAN+CLIP to build gorgeous scenery.

For next-generation picture synthesis, there are the Stable Diffusion and DALL-E 2 models. However, the website is not fully free to use. You only receive three free credits when you sign up.

To use further sophisticated models, you either purchase or earn credits through social marketing. By the way, NightCafe is another tool that allows you to compare several picture models.

Key Features

- It has more algorithms than most other generators

- It provides a wide range of customizing options for power users

- Credits can be earned by taking part in the community

- There are numerous possibilities for social contact, and the community is vibrant and friendly

- Creating groups of comparable items is a convenient method to keep track of your creations

- You can download all images at once

- You have control over video creation

- It’s possible to order prints of your work

- The abundance of added features distinguishes NightCafé from its competitors

Pros&Cons

Pros:

- It is simple to get started; no email sign-up or credit card required

- There are online and app versions available

- A thriving AI art community where you can find countless AI masterpieces and language prompts for inspiration

Cons:

- The free version produces low-resolution results; you must pay to get high-resolution images.

- It may take some time to create images

6. Craiyon

Craiyon, formerly known as DALL-E Mini, is a free online AI platform that lets you generate AI images at no cost. It’s really simple to use and doesn’t even require you to sign up to get started.

Enter your prompt, and Craiyon will produce 9 distinct graphics depending on your specifications.

Craiyon does not allow you to choose art styles, yet the generated images are nonetheless beautiful and diversified. So, if you’re looking for some interesting AI image generators to play with, give Craiyon a go.

Key Features

- Easy to use

- Text to Image Generation: Using AI, Craiyon creates an original image with only text input

Pros&Cons

Pros:

- There is no need to sign up

- It’s completely free to use, and you may make as many AI pictures as you like

- Create 9 AI pictures each time

Cons:

- Ads

- Styles and customizing choices are limited

- When compared to other AI picture generators, output quality may be slightly lower

7. Jasper Art

One of the better Midjourney alternatives is Jasper Art. It is a free, AI-powered art generator that allows users to create their own digital artwork from the ground up. The company uses cutting-edge AI painting technology to quickly produce a range of styles that are fun to explore.

Given that this is an AI art generator, you may need to be creative with your prompt language to create anything meaningful, and the results may be surprising.

You can create anything from image realism to cartoons and more with the help of Jasper Art, a sophisticated AI art generator. Before it makes completely customized artwork, Jasper Art requires you to enter a few words.

Key Features

- AI is used to generate unique visuals in response to human input

- There are several languages you can select. As a result, images can be created from prompts in languages other than English

- If you have any queries or encounter any problems while using the program, you may get help through a support chat

- Copywriting templates can also be used to generate artwork in Jasper

Pros&Cons

Pros:

- Simple to use

- Quick picture creation

- There are several styles and alternatives available, such as pencil drawing, cartoon style, close-up 3D render, digital illustrations, and so on

- Each time, 4 images are created

Cons:

- Faces may look distorted sometimes

8. StarryAI

One of the most interesting features of StarryAI is that you have total control over the images it produces, so you can use them for personal or commercial purposes.

Its key selling point is the ability to generate free NFTs. Although the technology is still advancing, the software has already produced some breathtaking works of art.

Key Features

- It is a computer-generated image creator

- No user input required

Pros&Cons

Pros:

- A diverse choice of AI art styles and customizing possibilities

- Support both web and mobile apps

- Use of existing images as a reference

- 5 free AI artwork creations without watermarks each day

Cons:

- Image creation may take some time

Conclusion

Other than Midjourney, there are several AI art generators available. If you want to try something new, choose one of the Midjourney alternatives suggested above. You may expedite your design and creation process by harnessing the power of AI image-generation technologies.

We hope this information helps you gain a lot of clarity and locate a perfect Midjourney alternative that meets your needs and requirements!

The post 7 Best Midjourney Alternatives to Try in 2023 (Free&Paid) appeared first on OpenDream.

]]>Continue reading: Midjourney vs Stable Diffusion: Which Is The Best For You?

The post Midjourney vs Stable Diffusion: Which Is The Best For You? appeared first on OpenDream.

]]>So, is it worthwhile to pay for Midjourney? How does it differ from Stable Diffusion?

QUICK ANSWER

The differences between Stable Diffusion and Midjourney:

- Midjourney uses a proprietary machine learning model, whereas Stable Diffusion’s model is freely accessible.

- Stable Diffusion can be downloaded and run on your own computer if it meets the requirements. Midjourney is only available online, so you need a stable internet connection.

- You can access Midjourney only through the Discord chat app. Stable Diffusion, on the other hand, can be accessed through a number of online and offline programs.

- For restricted image production, Midjourney costs a minimum of $10 per month. You can run Stable Diffusion freely on your hardware or pay a charge to use internet services.

- Stable Diffusion may be used to fill or change just specific areas of a picture. Midjourney now features inpainting and outpainting using the Zoom Out button as of June 2023.

- Stable Diffusion provides thousands of downloadable custom models, whereas Midjourney only has a few to pick from.

- Midjourney may appear to be easier to use than Stable Diffusion since it has fewer parameters. The latter, on the other hand, has much more extensive features and customization choices.

Keep reading to find out more about the differences between Midjourney and Stable Diffusion. We’ll also give out image comparisons with the identical text prompt to evaluate which one performs better.

1. The Differences between Midjourney vs Stable Diffusion

| | Stable Diffusion (AUTOMATIC1111) | Midjourney |

| Image Customization | High | Low |

| Ease of getting started | Low | Medium |

| Ease of generating good images | Low | High |

| Inpainting | Yes | No |

| Outpainting | Yes | No |

| Aspect ratio | Yes | Yes |

| Model variants | ~1,000s | ~ 10s |

| Negative prompt | Yes | Yes |

| Variation from a Generation | Yes | Yes |

| Control composition and pose | Yes | No |

| License | Permissive. Depends on the model used | Restrictive. Depends on the paid tier |

| Make your own model | Yes | No |

| Cost | Free | $10-$60 per month |

| Model | Open-sourced | Proprietary |

| Content Filter | No | Yes |

| Style | Varies | Realistic illustration, artistic |

| Upscaler | Yes | Yes |

| Image Prompt | No | Yes |

| Image-to-image | Yes | No |

| Prompt word limit | No limit | No limit |

“While Midjourney is easier to use, Stable Diffusion provides plenty of options for both beginners and experienced users.”

2. Midjourney vs Stable Diffusion: Features

At first sight, Midjourney and Stable Diffusion seem to have the same feature set. However, each image generator has its own set of advantages and disadvantages.

Consider upscaling, which you could previously do in Midjourney using the U1, U2, U3, and U4 buttons. That is no longer feasible because Midjourney’s most recent model does not yet support any upscaling models.

In contrast, the open nature of Stable Diffusion allows you to download and experiment with various upscaling models. You can also make higher-resolution images than Midjourney’s current maximum of one megapixel if your computer has enough video memory (VRAM).

Maybe you will be interested: 99+ Best Midjourney Prompts That Will Surprise You

Here’s a summary of more differences between Midjourney and Stable Diffusion:

- Inpainting and outpainting: With Stable Diffusion, you can use inpainting to make changes to existing images. Similarly, outpainting allows you to add additional information outside of the limits of an existing image.

Midjourney has added these editing tools to each generation via a new Zoom Out button. However, if you want more customization, we recommend checking out Photoshop’s new Generative Fill function.

- Prompts for images: Midjourney allows you to include an image (or two) as part of your prompt. The bot will mix the image and your words to create an output that looks similar to the input.

- Custom art styles: The --niji argument invokes an anime-optimized model from Midjourney. Stable Diffusion, on the other hand, allows you to download unique models trained in a wide range of creative styles, from realistic to origami.

- Censorship: While the official base Stable Diffusion models don’t enable you to produce explicit images, bespoke models can get past these constraints. Midjourney does not allow this, and your account may be banned if your prompts contain sexual or provocative language.

Remember that you need to run Stable Diffusion on your own hardware to get the most out of it. Online services don’t provide the same versatility, so if those are your only option, Midjourney is just as capable as Stable Diffusion.

3. Midjourney vs Stable Diffusion: Pricing Plan

Midjourney, being a for-profit firm, has some limitations on how frequently you may use it. Even if you pay for a Midjourney subscription, you only get a limited number of image-generation hours per month.

The $30 plan and higher tiers include unlimited hours in Relax mode; however, each job can take several minutes to complete. Furthermore, there is no free tier or trial period.

Stable Diffusion works differently. Its source code is publicly accessible for download, so you may use it for free. You will, however, require a powerful computer with a dedicated graphics card. Most models require at least 4GB of VRAM, thus a recent gaming PC will suffice.

However, if you don’t already have one, this type of gear will cost you more than a thousand dollars. Fortunately, you can run Stable Diffusion online or, if you like something adventurous, you can use it in a cloud-based virtual machine like Google Colab.
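A rough break-even estimate helps put the hardware cost in perspective. The sketch below is illustrative only: it assumes the hardware costs exactly $1,000 (the text says more than that), uses the $10, $30, and $60 monthly fees quoted in this article, and ignores electricity and resale value. `months_to_break_even` is a hypothetical helper.

```python
import math

HARDWARE_COST = 1000  # assumed round figure; the article says "more than a thousand dollars"


def months_to_break_even(hardware_cost, monthly_fee):
    """Whole months of subscription fees needed to match the one-off hardware cost."""
    return math.ceil(hardware_cost / monthly_fee)


for fee in (10, 30, 60):
    months = months_to_break_even(HARDWARE_COST, fee)
    print(f"${fee}/month plan: hardware pays for itself after {months} months")
```

Under these assumptions, buying a PC only for image generation takes well over a year to pay off on any plan, which is why trying an online service or a cloud notebook first is a reasonable choice.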

>>> “Stable Diffusion’s free plan is available, but you have to make some effort.”

4. Midjourney vs Stable Diffusion: Which is the Best for You?

I’m not trying to be diplomatic, but it truly depends on what you’re looking for.

Midjourney has a distinct style that includes great contrast, superb lighting, and realistic artwork. It’s quite simple to make images with an extraordinary amount of fine detail. You can get good images without putting in much effort.

Even with a specific prompt, though, there is no guarantee that Midjourney will give you the exact result you want.

Stable Diffusion, on the other hand, may produce equal or superior pictures, but it takes a bit more expertise. So, if you’re looking for a challenge and want to get deep into the technical things, Stable Diffusion is the right fit.

Conclusion

I hope this post helps you understand the differences between Midjourney and Stable Diffusion. If you have the time and budget, you should try both tools. Both will most likely have a role in your workflow. We tried both and enjoyed the challenge of recreating one tool’s image with the other.

The post Midjourney vs Stable Diffusion: Which Is The Best For You? appeared first on OpenDream.

]]>Continue reading: Midjourney Discord: Step-by-Step Guide to Generate AI Images

The post Midjourney Discord: Step-by-Step Guide to Generate AI Images appeared first on OpenDream.

]]>Midjourney, unlike Stable Diffusion and DALL-E 2, cannot be installed on your own computer or used through a well-designed web app. It is only available through Discord.

Yes, Midjourney can only be accessed via a chat app.

However, its quality is well worth the hassle. Here’s a quick rundown of how to use Midjourney; keep reading for more information:


1. Sign up for Midjourney discord

Discord is a messaging tool that is similar to Slack in some aspects, although it was primarily developed for gamers seeking to coordinate strategies while playing multiplayer online games, such as League of Legends and World of Warcraft.

It’s extremely popular among gaming communities as well as artistic and hobby circles. Midjourney uses Discord for a reason, even if it looks a little complicated from the outside.

That said, before you can use Midjourney at all, you must first create a Discord account. It’s completely free, so go to Discord’s website and create a new account if you don’t already have one.

2. Sign up for Midjourney

Once you’ve created an account on Discord, go to the Midjourney website and click Join the Beta. This will prompt you to accept an invitation to the Midjourney Discord server. Accept the invitation, and you’re in.

| Midjourney suspended free trials in late March 2023 because some people abused the system. The company aims to bring them back, but there is currently no timetable.

Previously, the free trial gave you around 25 free images (limited to 0.4 hours of GPU time) under a Creative Commons BY-NC 4.0 non-commercial license. When free trials return (or if they’ve already returned by the time you read this), you can skip the next step, at least until you’ve used up your free images. |

- To sign up for a Midjourney plan, go to one of the newcomer rooms (such as #newbies-14 or #newbies-44).

- In the message area, type /subscribe and press Enter or Return. This is a slash command, which is how you interact with Discord bots such as Midjourney. You’re simply telling the Midjourney bot that you’d like to subscribe.

- This will take you to a page to sign up for a paid Midjourney plan.

The Basic Plan costs $10 per month, with 200 image creations each month, and the Pro Plan goes up to $60 per month, which includes 1,800 AI-generated images.
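To compare the two plans, a quick back-of-the-envelope calculation helps. The sketch below simply divides each monthly price by the image allowance quoted above. The `PLANS` dictionary and the `cost_per_image` helper are names made up for this illustration, and real usage may differ depending on how Midjourney meters GPU time.

```python
# Monthly price (USD) and approximate image allowance, as quoted in this post.
# These figures are illustrative and may not match current Midjourney pricing.
PLANS = {
    "Basic": {"price_usd": 10, "images": 200},
    "Pro": {"price_usd": 60, "images": 1800},
}


def cost_per_image(plan_name: str) -> float:
    """Return the approximate cost in USD of one image on the given plan."""
    plan = PLANS[plan_name]
    return plan["price_usd"] / plan["images"]


for name in PLANS:
    print(f"{name}: ${cost_per_image(name):.3f} per image")
```

On these numbers, the Basic plan works out to roughly 5 cents per image and the Pro plan to a little over 3 cents, so the Pro plan only pays off if you really need that many images.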

Return to Discord after you’ve signed up. It’s time to get started.


3. Generate Your First Image With Midjourney Discord

Midjourney is intended for use by a community of artists (hence the use of Discord), although the #newbies channels can be somewhat chaotic.

Many people are continuously submitting prompts and requests. (Scrolling through the various channels is an excellent way to discover what works and what doesn’t.)

You can send commands to the Midjourney Discord bot via direct messages if you’re a paid subscriber. Your images will remain publicly visible in the members’ gallery by default; if you want to make private images, you must subscribe to the most expensive Pro plan.

For the time being, let’s keep working in a #newbies channel. If you’ve paid for a plan and need a quieter workspace, click the Midjourney Bot and send it a direct message.

Type /imagine in the message box, followed by a text prompt, and press Return. You can enter whatever you like, but here are a few suggestions to get you started:

- Impressionist painting of a Canadian guy riding a moose in a maple grove.

- Vermeer’s painting of an Irish wolfhound drinking a pint in a typical Irish pub.

- A hyper-realistic rendering of a mermaid swimming in a green kelp forest surrounded by fish.

After about a minute, you will have four image variations based on your prompt.

4. Edit Images With Upscaling And Variations

There are eight buttons beneath each set of images you generate: U1, U2, U3, U4, V1, V2, V3, and V4.

- The U buttons upscale the selected image, producing a new, larger version with additional detail.

- The V buttons generate four additional versions of the selected image that are stylistically and aesthetically similar to it.

- A Re-roll option is also available, which will re-run your prompt and create four new images.

All of these buttons allow you to fine-tune and better control the images produced by Midjourney AI Discord.
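If the button labels feel cryptic, it can help to think of each one as a letter (the action) plus a number (which of the four images it applies to). The short sketch below decodes a label in that way. It is only a mental model of the buttons described above, not part of Midjourney or Discord, and `describe_button` is a hypothetical helper name.

```python
def describe_button(label: str) -> str:
    """Explain what a Midjourney button such as 'U2' or 'V4' does.

    Hypothetical helper for illustration only; it does not talk to Discord.
    """
    label = label.strip().upper()
    if len(label) != 2 or label[0] not in "UV" or label[1] not in "1234":
        raise ValueError(f"Unknown button label: {label!r}")
    action, position = label[0], label[1]
    if action == "U":
        return f"Upscale image {position} into a larger, more detailed version"
    return f"Generate four new variations of image {position}"


for button in ["U1", "V3"]:
    print(button, "->", describe_button(button))
```

So V3, for example, asks for four new variations of the third image in the set, while U1 upscales the first.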

Conclusion

Of course, the real power of Midjourney Discord comes from its prompts. Learning how to write effective prompts is currently the key to getting decent results from any generative AI tool.

Midjourney is one of the most straightforward AI image generators. Its default style is noticeably more artistic than that of DALLE 2, so even simple prompts like “a cow” can produce nice results.

Midjourney AI Discord is still in beta and being actively developed. The sixth edition is currently being tested, and the results are astounding. Hopefully, developers will add capabilities that allow Midjourney to integrate better as part of a workflow over the following several months.

